Over the past week, the specter of Meta’s potentially intrusive data tracking has once again raised its head, this time due to the launch of its new, personalized AI chat app, as well as recent testimony presented by former Meta employee Sarah Wynn-Williams.

In the case of Wynn-Williams, who’s written a tell-all book about her time working at Meta, recent revelations in her appearance before the U.S. Senate have raised eyebrows, with Wynn-Williams noting, among other points, that Meta can identify when users are feeling worthless or helpless, which it can use as a cue for advertisers.

As reported by the Business & Human Rights Resource Centre:

“[Wynn-Williams] said the company was letting advertisers know when the teens were depressed so they could be served an ad at the best time. For example, she suggested that if a teen girl deleted a selfie, advertisers might see that as a good time to sell her a beauty product as she may not be feeling great about her appearance. They also targeted teens with ads for weight loss when young girls had concerns around body confidence.”

Which sounds horrendous: that Meta would knowingly target users, and teens no less, with promotions at especially vulnerable times.

In the case of Meta’s new AI chatbot, concerns have been raised about the extent to which it tracks user information in order to personalize its responses.

Meta’s new AI chatbot uses your established history, based on your Facebook and Instagram profiles, to customize your chat experience, and it also tracks every interaction that you have with the bot to further refine and improve its responses.

Which, according to The Washington Post, “pushes the boundaries on privacy in ways that go much further than rivals ChatGPT, from OpenAI, or Gemini, from Google.”

Both are significant concerns, though the idea that Meta knows a lot about you and your preferences is nothing new. Experts and analysts have been warning about this for years, but with Meta locking down its data following the Cambridge Analytica scandal, it’s faded as an issue.

Add to this the fact that most people clearly favor convenience over privacy, so long as they can largely ignore that they’re being tracked, and Meta has generally been able to avoid ongoing scrutiny, essentially by not talking about its tracking and predictive capacity.

But there are plenty of examples that underline just how powerful Meta’s trove of user data can be.

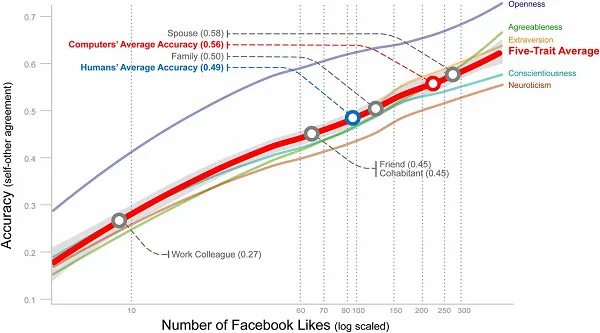

Back in 2015, for example, researchers from the University of Cambridge and Stanford University released a report which looked at how people’s Facebook activity could be used as an indicative measure of their psychological profile. The study found that, based on their Facebook likes, mapped against their answers from a psychological study, the insights could determine a person’s psychological make-up more accurately than their friends, their family, better even than their partners.

Facebook’s true strength, in this sense, is scale. For example, the information that you enter into your Facebook profile, in isolation, doesn’t mean a lot. You might like cat videos, Coca-Cola, maybe you visit Pages about certain bands, brands, and so on. By themselves, these actions might not reveal that much, but on a broader scale, each of these elements can be indicative. It could be, for example, that people who like this specific combination of things have an 80% chance of being a smoker, or a criminal, or a racist, whether they specifically mention such or not.

Some of these signals are more overt, others require more insight. But basically, your Facebook activity does show who you are, whether you meant to share that or not. We’re just not confronted with it, outside of ad placements. And with personal posting to Facebook declining in recent times, Meta’s also lost some of its data points, so you’d assume that its predictions are likely not as accurate as they once were.

But with Meta AI now hosting increasingly personal chats, on a broad range of topics, Meta now has a new stream of connection into our minds, which will surely showcase, once again, just how much Meta does know about you, and what your personal preferences and leanings may be.

Which it does indeed use for ads.

Meta does note in its AI documentation that “details that contain inappropriate information or are unsafe in nature” are not saved, while you can also delete the details that Meta AI saves about you at any time.

So you do have some options on this front. But if you needed a reminder, Meta is tracking a huge amount of personal information, and it has unmatched scale to cross-check that data against, which gives it an enormous amount of embedded understanding about user preferences, interests, leanings, and so on.

All of these could be used for ad targeting, content promotion, influence, and so on.

And yes, that is a concern, which is worth exploring. But again, over time, users have been given variable controls over their data, the capacity to limit the information that Facebook tracks, privacy settings, and so on. Despite all of these options, research shows that most people simply don’t restrict such.

Convenience trumps privacy, and Meta will be hoping the same rings true for its AI chatbot as well. That’s also why its Advantage+ AI-powered ads are producing results, and as its AI tools get smarter, and improve Meta’s capacity to analyze data at scale, Meta’s going to get even better at knowing everything about you, as revealed by your Facebook and Instagram presence.

And now your AI chats as well. Which will surely mean a more personalized experience. But the trade-off here is that Meta will also use that understanding in ways that you may not agree with.