Meta has taken another step forward with its AI plans, with the launch of its Llama 4 AI models, which, in testing, have been shown to deliver better performance on virtually all fronts than their competitors.

Well, at least based on the results that Meta’s chosen to release, but we’ll get to that.

First off, Meta’s announced four new models that are trained at a much larger scale, and carry far more parameters, than previous Llama models.

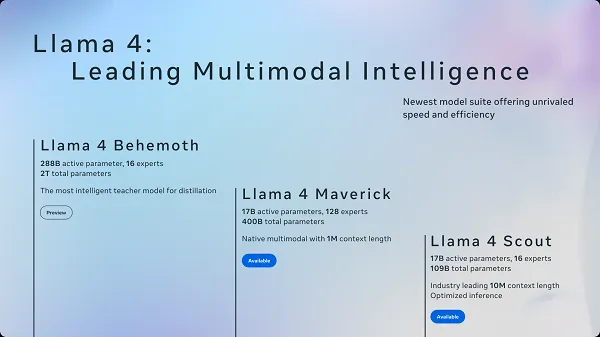

Meta’s four (yes, four, the last one is not featured in this image) new Llama models are:

- Llama 4 Scout, according to Meta, immediately becomes the fastest small model available, and has been designed to run on a single GPU. Scout includes 17 billion active parameters and 16 experts, which enables the system to optimize its responses based on the nature of each query.

- Llama 4 Maverick also includes 17 billion active parameters, but incorporates 128 experts. The use of “experts” means that only a subset of the total parameters is activated for each query, improving model efficiency by lowering model serving costs and latency. That means that developers utilizing these models can get similar results with less compute.

- Llama 4 Behemoth includes around 2 trillion parameters in total, making it one of the largest models announced to date. That, at least in theory, gives it far more capacity to understand and respond to queries with advanced learning and inference.

- Llama 4 Reasoning is the final model, which Meta hasn’t shared much information on as yet.

Each of these models serves a different purpose, with Meta releasing a range of options that can be run on less or more powerful systems. So if you’re looking to build your own AI system, you can use Llama 4 Scout, the smallest of the models, which can run on a single GPU.

So what does this all mean in layman’s terms?

To clarify, each of these systems is built on a range of “parameters”, which are numerical values that the model learns during training. These parameters are not the training dataset itself, but the internal settings that determine how the system interprets the data that it’s given.

So a system with 17 billion parameters will ideally handle queries better than one with fewer parameters, because it has more capacity to account for different aspects of each query, and to respond based on that context.

As a loose analogy, if you had a four parameter model, it would basically be asking “who, what, where, and when”, with each additional parameter adding more and more nuance. Google Search, as something of a comparison, uses over 200 “ranking signals” for each query that you enter, in order to provide you with a more accurate result.

So you can imagine how a 17 billion parameter process would expand on this.

And Llama 4’s parameters are more than double the scope of Meta’s previous models.

For comparison:

So, as you can see, over time, Meta’s building more capacity into its systems to dig further into the context of each request, which should then also provide more relevant, accurate responses.

Meta’s “experts”, meanwhile, are a new element within Llama 4, and are systematic controls that define which of those parameters should be applied, or not, to each query. That reduces compute time, while still maintaining accuracy, which should ensure that external projects utilizing Meta’s Llama models will be able to run them on lower spec systems.
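To make the “experts” idea a little more concrete, here is a minimal sketch of mixture-of-experts routing in Python. It is not Meta’s implementation: the toy experts, the random router scores, and the function names (`route_top_k`, `moe_forward`) are all assumptions made for illustration. In a real model the router is learned and each expert is a neural network layer, but the basic pattern of scoring the experts, then running only the top few, is the same.

```python
import math
import random


def softmax(scores):
    """Convert raw router scores into probabilities that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_scores, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    probs = softmax(router_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    # Renormalize so the weights of the selected experts sum to 1.
    return [(i, probs[i] / norm) for i in top]


def moe_forward(x, experts, router_scores, k=1):
    """Run only the selected experts and blend their outputs."""
    selected = route_top_k(router_scores, k)
    output = sum(weight * experts[i](x) for i, weight in selected)
    return output, [i for i, _ in selected]


# Hypothetical toy experts: each one simply scales its input.
NUM_EXPERTS = 16
experts = [lambda x, s=i + 1: x * s for i in range(NUM_EXPERTS)]

# Stand-in for a learned router: random scores with a fixed seed.
rng = random.Random(0)
router_scores = [rng.uniform(-1, 1) for _ in range(NUM_EXPERTS)]

output, used = moe_forward(2.0, experts, router_scores, k=1)
print(f"experts run: {len(used)} of {NUM_EXPERTS}")
```

With `k=1`, only one of the 16 toy experts does any work for this input, which is the source of the compute savings described above: the other experts’ parameters exist, but they sit idle for this query.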

Because really, nobody has the capacity that Meta does on this front.

Meta currently has around 350,000 Nvidia H100 chips powering its AI projects, with more coming online as it continues to expand its data center capacity, while it’s also developing its own AI chips that look set to build on this even further.

OpenAI reportedly has around 200k H100s in operation, while xAI’s “Colossus” supercomputer center is currently running on 200k H100 chips as well.

So Meta is likely now operating at close to double the capacity of its competitors (350,000 chips versus around 200,000), though Google and Apple are also developing their own approaches to the same.

But in terms of tangible, available compute and resources, Meta is pretty clearly in the lead, with its latest Behemoth model set to blow all other AI projects out of the water in terms of overall performance.

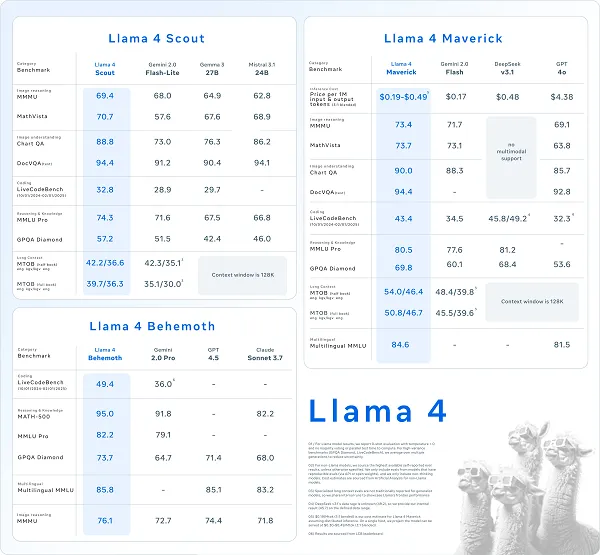

You may see a comparability of comparative efficiency between the most important AI initiatives in this chart, although some questions have additionally been raised as to the accuracy and applicability of Meta’s testing course of, and the benchmarks it’s chosen to match its Llama fashions towards.

It’ll come out in testing, and in consumer expertise both manner, however it’s also price noting that not all the outcomes produced by Llama 4 have been as mind-blowing as Meta’s appears to counsel.

However general, it’s seemingly driving higher outcomes, on all fronts, whereas Meta additionally says that the decrease entry fashions are cheaper to entry, and higher, than the competitors.

Which is important, because Meta’s also open sourcing all of these models for use in external AI projects, which could enable third-party developers to build new, dedicated AI models for varying purposes.

It’s a significant upgrade either way, which stands to put Meta on the top of the heap for AI development, while enabling external developers to utilize its Llama models also stands to make Meta the key load-bearing foundation for many AI projects.

Already, LinkedIn and Pinterest are among the many platforms that are incorporating Meta’s Llama models, and as it continues to build better systems, it does seem like Meta is winning out in the AI race. Because all of these platforms are becoming reliant on these models, and as they do, that increases their reliance on Meta, and its ongoing Llama updates, to power their evolution.

But again, it’s hard to simplify the relevance of this, given the complex nature of AI development, and the processes that are required to run such systems.

For regular users, the most relevant part of this update will be the improved performance of Meta’s own AI chatbot and generation models.

Meta’s also integrating its Llama 4 models into its in-app chatbot, which you can access via Facebook, WhatsApp, Instagram, and Messenger. The updated system processing will also become part of Meta’s ad targeting models, its ad generation systems, its algorithmic models, etc.

Basically, every aspect of Meta’s apps that utilizes AI will now get smarter, by drawing on larger, more capable models within their assessment, which should result in more accurate answers, better image generations, and improved ad performance.

It’s difficult to fully quantify what this will mean on a case-by-case basis, as individual results may vary, but I would suggest considering Meta’s Advantage+ ad options as an experiment to see just how good its performance has become.

Meta will be integrating its latest Llama 4 models over the coming weeks, with more upgrades still coming in this release.