Meta’s banning teen access to its AI chatbots in various personas, as it looks to implement a new system that will ensure more safety in their usage, and address concerns about teens getting harmful advice from these options.

Which makes sense, given the concerns already raised around AI bot interaction, but really, those would apply to everyone, not just teen users.

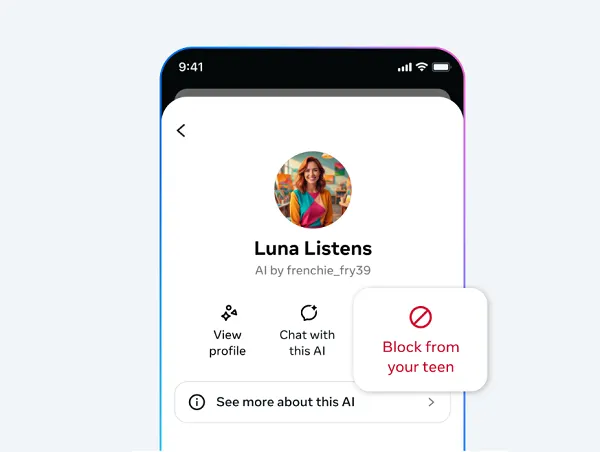

Back in October, Meta rolled out some new options for parents to control how their kids interact with AI profiles in its apps, amid concerns that some AI chatbots were providing potentially harmful advice and guidance to teens.

Indeed, several AI chatbots were found to be giving teens dangerous guidance on self-harm, disordered eating, how to buy drugs (and hide them from their parents), etc.

That sparked the launch of an FTC investigation into the potential risks of AI chatbot interaction, and in response, Meta added more control options that would enable parents to limit how their kids engage with its AI chatbots, in order to mitigate the potential concern.

To be clear, Meta’s not alone in this. Snapchat has also had to change the rules around the use of its “My AI” chatbot, while X has had its own recent large-scale issues with people using its Grok chatbot to generate offensive images.

Generative AI is still in its infancy, and as such, it’s almost impossible for developers to account for every potential misuse. Even so, these uses by teens seem fairly easy to predict, which likely reflects the broader push to prioritize rapid development over risk mitigation.

In any event, Meta’s implementing new controls over its AI bots to address this concern. But as it does so, it’s now decided to temporarily cut off teen access to its AI chatbots entirely (except Meta AI).

As per Meta:

“In October, we shared that we’re building new tools to give parents more visibility into how their teens use AI, and more control over the AI characters they can interact with. Since then, we’ve started building a new version of AI characters, to give people an even better experience. While we focus on developing this new version, we’re temporarily pausing teens’ access to existing AI characters globally.”

I mean, that doesn’t sound great. It sounds like more concerns have been raised, while clearly, the FTC probe would be playing on the minds of Meta execs, as a potential penalty looms over any and all misuse.

“Starting in the coming weeks, teens will no longer be able to access AI characters across our apps until the updated experience is ready. This will apply to anyone who has given us a teen birthday, as well as people who claim to be adults but who we suspect are teens based on our age prediction technology. This means that, when we deliver on our promise to give parents more oversight of their teens’ AI experiences, those parental controls will apply to the latest version of AI characters.”

So Meta’s tacitly admitting that there are some significant flaws in its AI chatbots, which need to be addressed. And while teens will still be able to access its main Meta AI chatbot, they won’t be able to interact with custom bot personas within its apps.

Will that have a big impact?

Well, a study published last July found that 72% of U.S. teens have already used an AI companion, with many of them now conducting regular social interactions with their chosen virtual friends.

Combine this with Meta’s push to introduce an army of AI chatbot personas into its apps, as a means to boost engagement, and it probably does put a spanner in the works for its broader plans.

But the biggest question is how you can possibly safeguard AI interaction of this type, given that AI bots are learning from the internet, and will adjust their responses based on the query.

There are many ways to trick AI chatbots into giving you responses that their developers would prefer they don’t provide, and because they’re matching up language on such a large scale, it’s impossible to account for every variation in this respect.

And digital native teens are extremely savvy, and will be looking for weak points in these systems.

As such, the move to restrict access entirely makes sense, but I’m not sure how Meta will be able to develop effective safeguards against future concerns.

So maybe just don’t implement AI chatbots in various personas that present themselves as real people.

There’s no real need for them in any context, and they also arguably run counter to the “social” aspect of social media anyway, as it’s not “social,” in our more common understanding of the term, to talk to a computer.

Social media was designed to facilitate human interaction, and the push to dilute that with AI bots seems at odds with this. I mean, I get why Meta would want to do it, as more AI bots engaging like real people in its apps, liking posts and leaving comments, will make real human users feel special, and will encourage more posting behavior, and time spent.

I understand the appeal for Meta, but I don’t think the risks have been fully assessed, especially when you consider the potential impacts of developing relationships with non-human entities, and what that might do to people’s perception and mental state.

Meta’s admitting that this could be a problem for teens. But really, it could be a problem across the board, and I don’t believe that the value of these bots, from a user perspective, would outweigh that potential risk.

At least until we know more. At least until we’ve got some large-scale studies that show the impacts of AI bot interaction, and whether it’s a good or bad thing for people.

So why stop at banning them for teens? Why not ban them for everyone until we have more insight?

I know this won’t happen, but this seems like an admission that this is a more significant area of concern than Meta anticipated.