Given the recent revelations about xAI’s Grok producing illicit content en masse, sometimes of minors, this seems like a timely overview.

The team from the Future of Life Institute recently undertook a safety review of some of the most popular AI tools on the market, including Meta AI, OpenAI’s ChatGPT, Grok, and more.

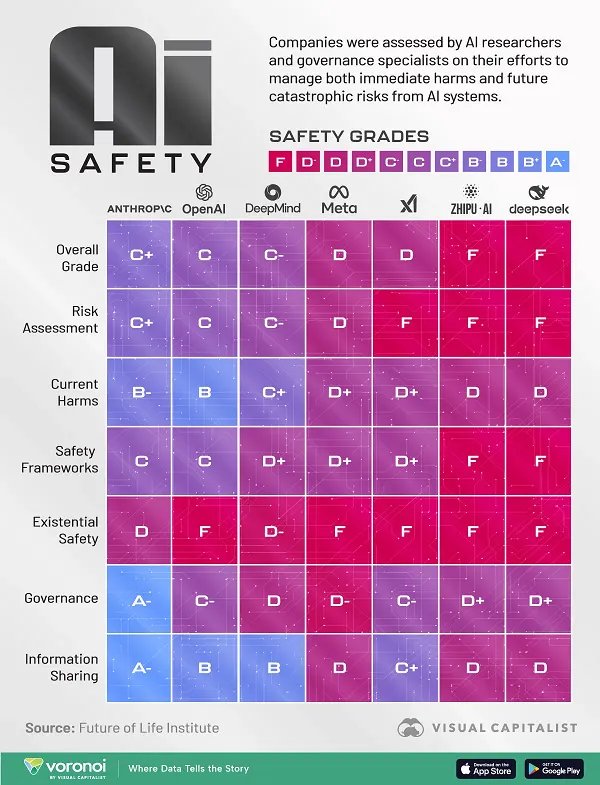

The review looked at six key elements:

- Risk assessment – Efforts to ensure that the tool can’t be manipulated or used for harm

- Current harms – Including data security risks and digital watermarking

- Safety frameworks – The process each platform has for identifying and addressing risk

- Existential safety – Whether the project is being monitored for unexpected evolutions in programming

- Governance – The company’s lobbying on AI governance and AI safety regulations

- Information sharing – System transparency and insight into how it works

Based on these six elements, the report then gave each AI project an overall safety score, which reflects a broader assessment of how each is managing developmental risk.

The team from Visual Capitalist has translated these results into the infographic below, which provides some additional food for thought about AI development, and where we might be heading (especially with the White House looking to remove potential impediments to AI development).