A study published by science journal Nature has examined the impact of Elon Musk’s changes to X/Twitter, and describes how X’s algorithm shapes political attitudes and leans towards conservative views.

The results are no surprise, given that numerous other studies have indicated similar leanings and influence. But this latest report, based on an analysis of almost 5,000 participants, provides large-scale insight into X’s political persuasiveness, and how the platform’s approach under Musk has turned it into a key tool for driving Republican and Independent support in the U.S.

The study was conducted in 2023, around six months after Elon Musk took over the app. Participants, who were all existing X users, were paid to stick to either the chronological X feed or the algorithm-powered For You feed for seven weeks.

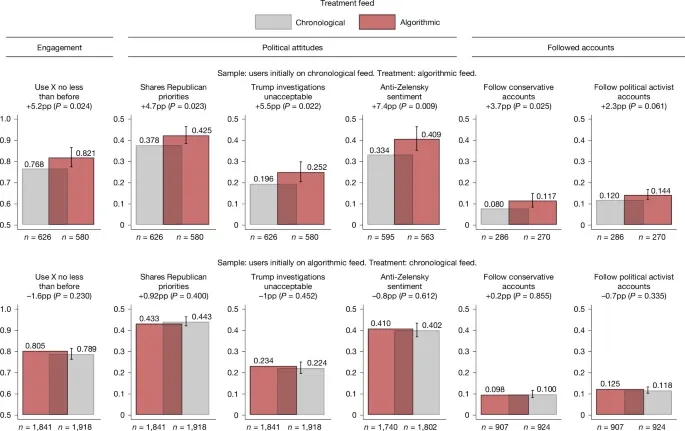

Based on this sample set, participants were measured on how they felt about key political issues before and after exposure. The results showed that those who were on the algorithmic feed leaned much further towards conservative viewpoints.

As per the report: “We found that the algorithm promotes conservative content and demotes posts by traditional media. Exposure to algorithmic content leads users to follow conservative political activist accounts, which they continue to follow even after switching off the algorithm. These results suggest that initial exposure to X’s algorithm has persistent effects on users’ current political attitudes and account-following behaviour, even in the absence of a detectable effect on partisanship.”

As shown in these charts, where the top line represents users exposed to the algorithmic feed and the bottom line represents the chronological feed users, those shown the algorithm-defined feed ended up using X more and leaning further towards conservative viewpoints, while those on the chronological timeline saw no real change in their attitudes.

Which, again, is no surprise.

Last year, Sky News conducted a month-long experiment on X to measure political bias, and found that X’s algorithm showed a significant bias towards promoting far-right and extreme content.

The Wall Street Journal and the Washington Post have also published their own separate analysis reports, both of which show how X now amplifies right-leaning political content over left-leaning views. In addition, Queensland University published a report that found X’s algorithm pushes pro-Republican accounts.

The balance of research clearly indicates a level of political leaning in X’s systems, though that may also be influenced by the platform’s bias against what it considers mainstream media, and its sowing of mistrust in established media outlets.

Musk himself has been a leader on this. A separate report by Nature found that Musk regularly questions the validity of media reports via his X account, which is the most followed single profile in the app. Musk amplifies his opinion to 234.8 million followers, while reports also indicate that X’s algorithm seeks to boost the reach of Musk’s posts specifically, further increasing his influence.

In some ways, Musk himself could be the best indicator of X’s political leanings, and potentially the most significant single factor driving opinion in the app. And with Musk clearly leaning conservative, it seems logical that X would lean this way too.

Also worth noting is the value of the algorithmic feed to the platform, with those on the algorithmic stream using X more as a result. If you ever wanted to know why social platforms don’t just let users view their timelines in chronological order, and see only posts from the accounts that they choose to follow, this would be your answer, though that same approach can also lead to manipulation and influence via algorithmic means.

Maybe that’s an angle that should be explored. If platforms were forced to eliminate algorithmic amplification entirely, it could reduce political influence.