With Australia’s new teen social media restrictions set to come into effect within weeks, we’ll soon have our first critical test of whether such restrictions are workable, and indeed, enforceable by law.

Because while support for tougher restrictions on social media access is now at record high levels, the challenge lies in actual enforcement, and in implementing systems that can effectively detect underage users. Thus far, no platform has been able to enact workable age checking at scale, though all platforms are now developing new systems and processes in the hope of aligning with these new legal requirements.

Those requirements also look set to become the norm around the world, following Australia’s lead.

In Australia specifically, from December 10th, all social media platforms will have to “take reasonable steps” to restrict teens under the age of 16 from accessing their apps.

To be clear, the minimum age to create an account on all the major social apps is already 13, but this new push is designed to increase enforcement, and to ensure that each platform is held accountable for keeping young teens out of its app.

As explained by Australia’s eSafety Commission:

“There’s evidence between social media use and harms to the mental health and wellbeing of young people. While there can be benefits in social media use, the risk of harms may be increased for young people as they don’t yet have the skills, experience or understanding to navigate complex social media environments.”

Which is why Australia is enacting this new law, with the eSafety Commission now running ads in social apps to inform users of the coming change.

And as noted, many other regions are also considering similar restrictions, based on the growing body of evidence that social media exposure can be harmful for young users.

Though detection remains a challenge, and without universal rules on how social apps detect and restrict teens, legal enforcement of any such bill will be difficult.

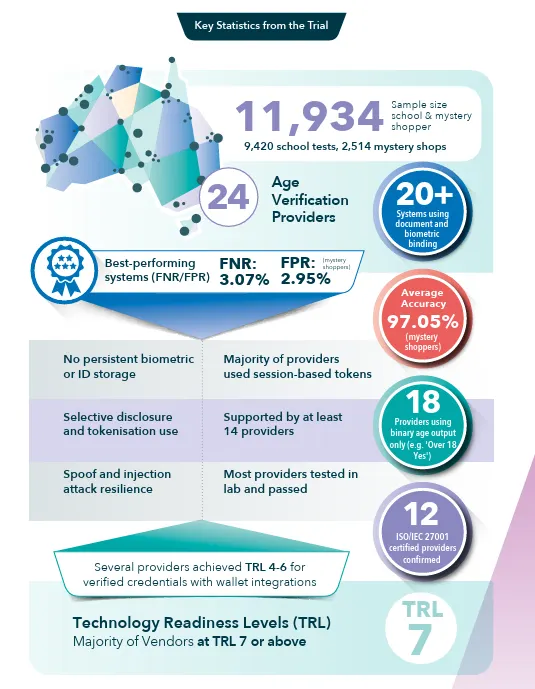

In Australia’s case, the government actually conducted a series of trials of various age detection measures, including video selfie scanning, age inference from behavioral signals, and parental consent requirements, in order to determine whether accurate age detection is possible using the latest technology, and what the best approach would be.

The summary findings from this report were:

“We found a plethora of approaches that fit different use cases in different ways, but we did not find a single ubiquitous solution that would suit all use cases, nor did we find solutions that were guaranteed to be effective in all deployments.”

Which seems problematic. Rather than implementing an industry-standard system, which would ensure that all social platforms under these new restrictions are held to the same standards, Australia has instead opted to advise the platforms of these various options, and let them choose which they want to use.

The justification here, as per the above quote, is that different technologies have different benefits and limitations in varying contexts, and as such, the platforms themselves need to find the best solution for their own use, in alignment with the new rules.

Though that seems like a potential legal loophole, in that if a platform is found to be failing to restrict young teens, it’ll be able to argue that it’s taking “reasonable steps” with the systems it has, whether they’re the most effective systems or not.

Meanwhile, YouTube has already refused to abide by the new standards (arguing that it’s a video platform, not a social media app), while every social platform has opposed the push, arguing that it simply won’t be effective. Another counterargument is that the ban will actually end up driving young people to more dangerous corners of the internet that aren’t being held to the same standards. Even so, Meta and TikTok have said that they will adhere to the new rules, despite objecting to them.

Though of course, given the impact on usage, you’d expect the platforms to push back. Meta reportedly has around 450k Australian users under the age of 16 across Facebook and IG, while TikTok looks set to lose around 200k users, and Snap has more than 400k young teens in the region.

As such, the platforms might well push back regardless of the specifics, though Meta has also implemented a range of new age verification measures of its own to comply with these and other proposed teen restrictions.

And again, many regions are watching Australia’s implementation here, and considering their own next steps.

France, Greece and Denmark have put their support behind a proposal to restrict social media access for users under 15, while Spain has proposed a minimum access age of 16. New Zealand and Papua New Guinea are also moving to implement their own laws to restrict teen social media access, while the U.K. has implemented new regulations around age checking, in an effort to force platforms to take more action on this front.

One way or another, more age restrictions are coming for social apps, so they’ll have to implement improved measures either way.

The question now is how effective each approach will be, and how to set a clear industry standard for age checking.

Because telling platforms to take “reasonable steps,” then leaving them to find their own best way forward, is going to lead to legal challenges, which could well render this push largely ineffective.