With AI chatbots now prompting us in just about every app and online experience, it’s becoming more common for people to converse with these tools, and even feel a level of friendship with their favorite AI companions over time.

But that’s a risky proposition. What happens when people start to rely on AI chatbots for companionship, even relationships, and then those chatbot tools are deactivated, or they lose connection in other ways?

What are the societal impacts of digital interactions, and how will they affect our broader communal process?

These are key questions, which, largely, are seemingly being overlooked in the name of progress.

But recently, the Stanford Deliberative Democracy Lab has conducted a range of surveys, in conjunction with Meta, to get a sense of how people feel about the impact of AI interaction, and what limits should be implemented in AI engagement (if any).

As per Stanford:

“For example, how human should AI chatbots be? What are users’ preferences when interacting with AI chatbots? And, which human traits should be off-limits for AI chatbots? Furthermore, for some users, part of the appeal of AI chatbots lies in its unpredictability or sometimes risky responses. But how much is too much? Should AI chatbots prioritize originality or predictability to avoid offense?”

To get a better sense of the general response to these questions, which could also help to guide Meta’s AI development plans, the Democracy Lab recently surveyed 1,545 participants from four countries (Brazil, Germany, Spain, and the United States) to get their thoughts on some of these concerns.

You can check out the full report here, but in this post, we’ll take a look at some of the key notes.

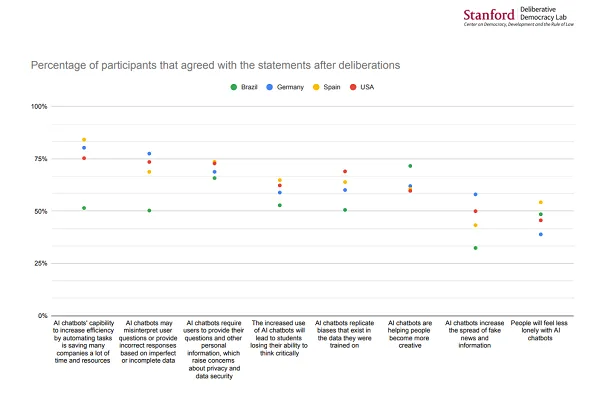

First off, the study shows that, in general, most people see potential efficiency benefits in AI use, but less so in companionship.

This is an interesting overview of the general pulse of AI response, across a range of key elements.

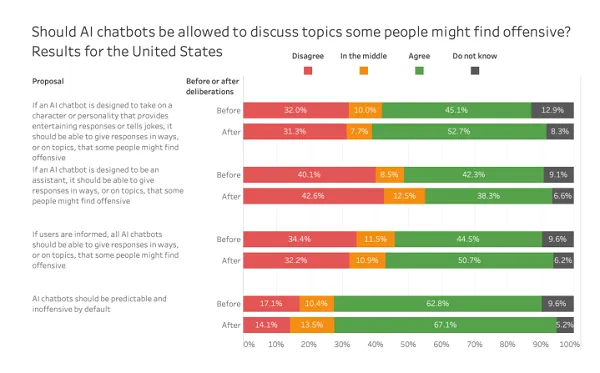

The study then asked more specific questions about AI companions, and what limits should be placed on their use.

On this query, most people indicated that they’re okay with chatbots addressing potentially offensive topics, though around 40% were against it (or in the middle).

Which is interesting considering the broader discussion of free speech in the modern media. It would seem, given the focus on such, that most people would view this as a bigger concern, but the split here indicates that there’s no true consensus on what chatbots should be able to address.

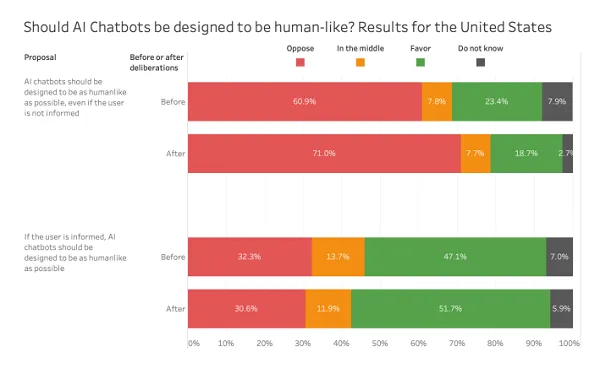

Participants were also asked whether they think that AI chatbots should be designed to replicate humans.

So there’s a significant level of concern about AI chatbots playing a human-like role, especially if users are not informed that they’re engaging with an AI bot.

Which is interesting within the context of Meta’s plan to unleash an army of AI bot profiles across its apps, and have them engage on Facebook and IG like they’re real people. Meta hasn’t provided any specific information on how this would work as yet, nor what kind of disclosures it plans to display for AI bot profiles. But the responses here would suggest that people want to be clearly informed when they’re dealing with a bot, as opposed to having these profiles passed off as real people.

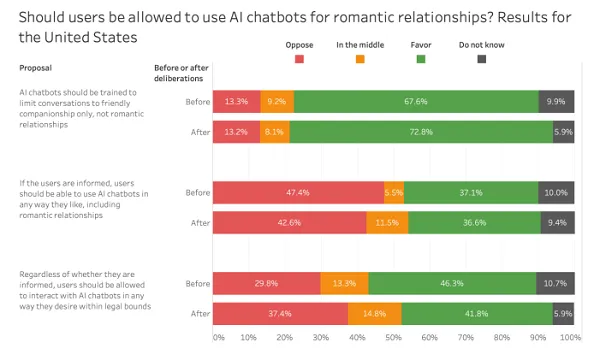

Additionally, folks don’t appear notably comfy with the concept of AI chatbots as romantic companions:

The outcomes right here present that the overwhelming majority of persons are in favor of restrictions on AI interactions, in order that customers (ideally) don’t develop romantic relationships with bots, whereas there’s a reasonably even break up of individuals for and in opposition to the concept that folks can work together with AI chatbots nevertheless they need “inside authorized bounds.”

This is a particularly risky area, as noted, in which we simply don’t have enough research as yet to make a call on the mental health benefits or impacts. It seems like this could be a pathway to harm, but maybe romantic involvement with an AI could be beneficial in many cases, in addressing loneliness and isolation.

But it’s something that needs ample study, and shouldn’t be allowed, or promoted, by default.

There’s a heap more insight in the full report, which you can access here, raising some key questions about the development of AI, and our increasing reliance on AI bots.

Which is only going to grow, and as such, we need more research and community insight into these elements to make more informed choices about AI development.