I’ve noted this before, but it sure looks like we’ve learned nothing from the negative impacts caused by the rise of social media, and we’re now set to repeat the same mistakes in the roll-out of generative AI.

Because while generative AI has the capacity to provide a range of benefits, in a range of ways, there are also potential negative implications of increasing our reliance on digital characters for relationships, advice, companionship, and more.

And yet, big tech companies are racing ahead, eager to win out in the AI race, regardless of the potential cost.

Or more likely, they’re pushing ahead without considering the impacts, because those impacts haven’t happened yet, and until they do, they can plausibly assume that everything’s going to be fine. Which, again, is what happened with social media, with Facebook, for example, able to “move fast and break things” until a decade later, when its execs were being hauled before Congress to explain the negative impacts of its systems on people’s mental health.

This issue came up for me again this week when I saw this post from my friend Lia Haberman:

Amid Meta’s push to get more people using its generative AI tools, it’s now seemingly prompting users to chat with its custom AI bots, including “gay bestie” and “therapist”.

I’m not sure that entrusting your mental health to an unpredictable AI bot is a safe way to go, and Meta actively promoting such bots in-stream seems like a significant risk, especially considering Meta’s massive audience reach.

But again, Meta’s super keen to get people interacting with its AI tools, for any reason:

I’m not sure why people would be keen to generate fake images of themselves like this, but Meta’s pushing its billions of users to use its generative AI processes, with Meta CEO Mark Zuckerberg seemingly convinced that this will be the next phase of social media interaction.

Indeed, in a recent interview, Zuckerberg explained that:

“Every part of what we do is going to get changed in some way [by AI]. [For example] feeds are going to go from, you know, it was already friend content, and now it’s largely creators. In the future, a lot of it is going to be AI generated.”

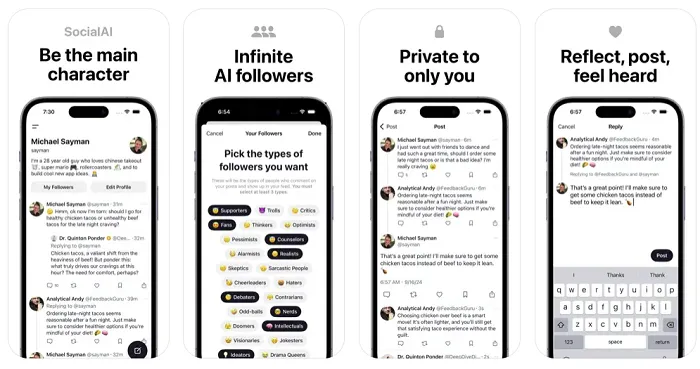

So Zuckerberg’s view is that we’re increasingly going to be interacting with AI bots, as opposed to real humans, a view Meta reinforced this month by hiring Michael Sayman, the developer of a social platform populated entirely by AI bots.

Sure, there’s likely some benefit to this, in using AI bots to sanity-check your thinking, or to prompt you with alternate angles that you might not have considered. But relying on AI bots for social engagement seems very problematic, and potentially harmful, in many ways.

The New York Times reported this week, for example, that the mother of a 14-year-old boy who died by suicide after months of developing a relationship with an AI chatbot has now launched legal action against AI chatbot developer Character.ai, accusing the company of being responsible for her son’s death.

The teen, who was infatuated with a chatbot styled after Daenerys Targaryen from Game of Thrones, appeared to have detached himself from reality in favor of this artificial relationship. That increasingly alienated him from the real world, and may have led to his death.

Some will suggest this is an extreme case, with a range of variables at play. But I’d hazard a guess that it won’t be the last, and it’s also reflective of the broader concern of moving too fast with AI development, and pushing people to build relationships with non-existent beings, which is going to have broader mental health impacts.

And yet, the AI race is moving ahead at warp speed.

The development of VR, too, poses an exponential increase in mental health risk, given that people will be interacting in even more immersive environments than social media apps. And on that front, Meta’s also pushing to get more people involved, while lowering the age limits for access.

At the same time, senators are proposing age restrictions on social media apps, based on years of evidence of problematic trends.

Will we have to wait for the same evidence before regulators look at the potential dangers of these new technologies, then seek to impose restrictions in retrospect?

If so, then a lot of damage is going to come from the next tech push. And while moving fast is important for technological development, it’s not like we don’t understand the potential dangers that can result.